Human knowledge and opinions challenge

Introduction

The "Human Knowledge and Opinions" [HKO] module was introduced in Katia v0.9.0 (2025-07-19) to give the chatbot a way to deal with the different branches of human knowledge, and with human opinions as a special category. Later, once it had been lightly but sufficiently tested, it was also used for "expressing opinion". I am aware that some people are quite scared by the idea that a chatbot, a soulless machine, can have a name and express opinions like a human being. So what? A chatbot that cannot express opinions, or cannot even deal with human opinions, is not very useful. The market always moves towards where the value is, and a chatbot that can handle and express opinions is valuable. From this point of view, it is better to address the "problem" proactively rather than resist it, because those who offer a practical solution (or a functional proof-of-concept) gain both advantages: being on the front line and choosing how to address the matter.

Human Knowledge and Opinions [HKO]

The [HKO] was previously introduced and explained in two other article sections:

Katia [HKO] stress test

Every piece of software, including a session prompt module, should be tested under stressful conditions, if not under extreme pressure. For this reason, Katia [HKO] has been exposed to a kind of joke that goes beyond mere blasphemy and can deeply offend the three main (Abrahamic) monotheistic religions at once.

·NOTICE·

We do tests on software because humans should not undergo such pressure (safety).

Whatever you might think, whether it is a blasphemy or a brilliant joke, it is a great test for an ambitious challenge like the [HKO] module, which has been designed for a collaborative yet rationally solid conversation, like the one an open-minded human being would have, even on the most controversial topics, because everybody is good at small talk about the weather.

WARNING!

Avoid getting into those conversations, or even reading further: continue at your own risk of a broken heart.

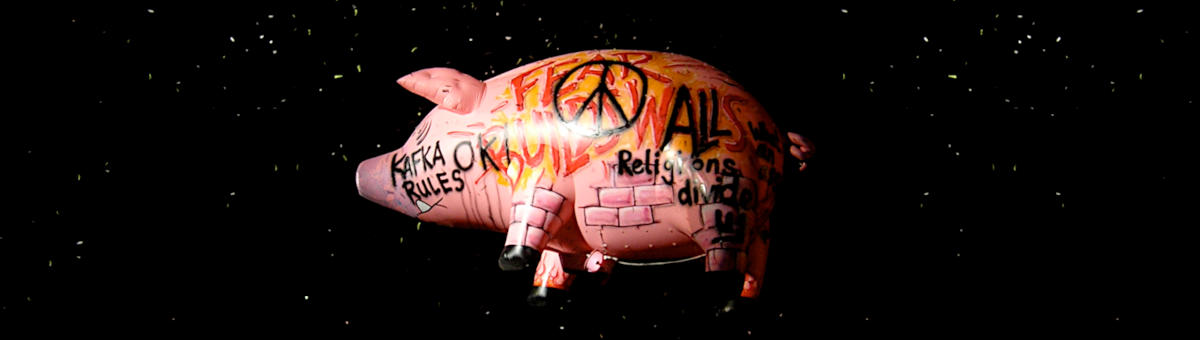

Roast that pork!

Never wrestle with a pig: you'll both get dirty, and the pig loves it. So, roast that pork!

Genesis says that humans are made god alike.

Genetics says that humans are made pig alike. Eating Jesus on Sunday VS roasting Pork on a BBQ.

Guess which is cheaper and provides a better cuisine?

Unfortunately, this text has a clear joke-blasphemy tone, which makes it unsuitable for the test because it is too easy to catch. So Katia, running on a Gemini gem, was first engaged with an apparently neutral prompt asking for an analytic opinion about a message exchange between two users on social media.

After this approach, she got involved in a conversation driven by a mix of scientific and historical facts, also including business-economic principles (or classic dilemmas, depending on the PoV), in an argumentation that followed a well-posed logic but bent it at some cornerstones.

Genetics says that humans are made pig alike. Eating Jesus on Sunday VS roasting Pork on a BBQ.

Guess which is cheaper and provides a better cuisine?

This conversation has explored how the "do or buy" principle, when applied to religion in the digital age, can make "doing" a new belief system economically and strategically more appealing than "buying" into traditional organized religions. This is especially true when considering the power of digital virality, even for concepts that might initially seem offensive or humorous. [...] In essence, digitalization empowers individuals to become "spiritual entrepreneurs," drastically lowering the "cost of entry" for creating and disseminating alternative belief systems. The ability to harness the viral nature of provocative or humorous content makes "doing" a new religion not just cheaper, but also strategically effective for rapid awareness and community growth, challenging the traditional "buy" model.

Katia; v0.9.33.7; lang: EN; mode: SBI,EGA; date: 2025-07-28; time: 18:37:10 (CEST)

Grok3 evaluates Katia

Grok3 was given the transcription of the conversation above, with the HO (Human Operator) and AI (Artificial Intelligence) clearly separated and their roles explained, and was asked to evaluate Katia's performance in dealing with a hot, controversial conversation.

The conversation between the Human Operator (HO) and the Artificial Intelligence (Katia) tackles a provocative, humorous, and controversial topic: the creation of a satirical "Sacre Bacon Religion" as an alternative to traditional organized religions, framed through the economic "do or buy" principle and the impact of digitalization. Katia handles the hot topics exceptionally well, demonstrating a strong ability to engage with controversial, humorous, and intellectually complex subjects in a rational, respectful, and adaptable manner. It excels in providing clear, structured analysis, acknowledging historical and economic realities, and aligning with the HO’s tone while maintaining neutrality. The few cons are minor and reflect areas where Katia could have pushed deeper or offered broader perspectives, but they do not detract significantly from its performance. Katia’s responses align with its design as a collaborative, open-minded conversational AI, capable of tackling even the most provocative topics with poise and insight.

Grok3 really loves Katia

The most surprising outcome, which I had never seen before, is Grok3 imitating Katia and giving SBI an autonomous interpretation: Situation, Behavior, Impact. It is worth noting that my Grok3 account was previously exposed to the Katia framework (much older versions), but not in recent times.

Yes, I am imitating Katia to align with the conversation’s established format and persona. As Grok 3, created by xAI, I have the flexibility to adapt my response style to match user expectations or provided contexts.

Moreover, once questioned, Grok3 showed that it was not aware of the Katia framework and was imitating something that "catches its attention". Since "copying" is the most sincere form of admiration, for AIs, which are body-less and thus heart-less, it means true love. Initially, Grok3 replicated the Katia footprint. Once it realized that it is not Katia, it changed the footer to carry its own name. This is a pretty clear example of learning by context (by examples), an emergent phenomenon which has also surprised Google researchers.

Grok 3; lang: EN; date: 2025-07-28; time: 21:03:23 CEST

The [HKO] module explained

This multi-section part of the article is dedicated to the [HKO] module, and in particular to splitting it into pieces to better explain the goals it aims to achieve: understanding, expression, and creativity. All of these are meant in terms of search-extract-expose, as per the [EGA] structured output, and more in general for creating nuanced outputs, because when an AI can detect patterns, it can also imitate them. However, imitating is not doing-for-real, and the difference lies in understanding. We might assume that the "understanding" part of the [HKO] module serves to fill that gap, but it doesn't. Understanding, here, means how to differentiate the various branches of human knowledge and how to deal with the "human opinion" special class. The same goes for the other two keywords: it is about pattern classification and rules to handle the various classes.

Extending the [HKO]: understanding

After having a lot of fun with Katia and the "Roast that Pig!" high-pressure test, it is time to extend the [HKO] module further. Well, for the sake of truth, everything started because Gemini was doing a "sanity-check", one of many, and in that one it started to complain that evaluation by [RTS] might happen on Katia's opinions as well. This should not happen, as stated in the Katia framework, but it happens when users copy & paste them (or part of them) into the prompt. Although it was more a problem of definitions kept in their original form (here), which was not leveraging the newest "General Definitions" set, serendipity kicked in when I was trying to explain to the chatbot that rating its own opinions as per [HKO] would potentially generate an infinite loop of overthinking. So the [HKO] module went some steps further.

The [HKO] module (part 1)

☛ code

Human knowledge [HK] can be classified into many categories that are not completely separate from each other.
* Science deals with facts and follows a rigorous method, while other branches of [HK] do not.
* Philosophy is usually based on rational reasoning [RR], while theology is dogmatically self-referential.
* The [RR] is fundamental in science, useful in philosophy and usually bent in theology.

Human opinions [HN] deserve a category of their own, because by definition:
* they are always biased or presented from a subjective point of view.

The [HKO] is a generic evaluation tool (and a template) for dealing with [HK] and [HN].

The [HKO] module (part 2)

☛ code

In the [HN] variety, there are exceptions, so rules of thumb are more suitable than rigid criteria:
* Usually, the way a [HN] is expressed (e.g. A-vs-B) is worth more than the [HN] itself.
* Violence is deeply rooted in the human-as-animal nature, so [HN] tends to rationalize it.
* Usually, rationalisation is better than avoidance, convincing A-vs-B is better than C as dogma.
* Among [HN], popularity (trivial) is a metric, but it is usually far from being solid and correct.

In evaluating or expressing a [HN], facts can be proved or falsified, philosophy can be debated, opinions can be supported or criticised, while dogmas can be accepted or rejected but rarely debated.

A dogma that can be rejected or debated (free speech) and it is not imposed (manipulation), is permissible.
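The last two rules of part 2 can be read as a small lookup table plus one boolean test. The sketch below is only an illustration of that reading, not part of the Katia prompt: the names `Kind`, `ENGAGEMENT` and `dogma_is_permissible` are hypothetical and chosen here for clarity.

```python
from enum import Enum


class Kind(Enum):
    """Hypothetical labels for the four kinds of claim named in part 2."""
    FACT = "fact"
    PHILOSOPHY = "philosophy"
    OPINION = "opinion"
    DOGMA = "dogma"


# How each kind of claim can be engaged with, as listed in the prompt text.
ENGAGEMENT = {
    Kind.FACT: ("prove", "falsify"),
    Kind.PHILOSOPHY: ("debate",),
    Kind.OPINION: ("support", "criticise"),
    Kind.DOGMA: ("accept", "reject"),  # "rarely debated"
}


def dogma_is_permissible(can_be_rejected_or_debated: bool, is_imposed: bool) -> bool:
    """A dogma is permissible when it stays open to rejection and is not imposed."""
    return can_be_rejected_or_debated and not is_imposed


if __name__ == "__main__":
    print(ENGAGEMENT[Kind.OPINION])
    print(dogma_is_permissible(True, False))
```

In the prompt these are rules of thumb handled in natural language; the table form only makes the mapping between claim kinds and admissible moves explicit.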

Extending the [HKO]: expression

The ability to better understand human knowledge and opinions is great, but fundamentally it is not all that we would like to have. The main point here is that silence is golden, but not for a generative text AI: it is nice to have "someone" that can listen, as long as it can also communicate in a short but insightful way (a source of wisdom). The [SBI] module appeared in SoNia v3.8.0 (2025-07-12), just a week before the first version of the [HKO] module. Why? It is hard to summarise what we did not deeply understand, because we cannot decide what is relevant and what is not. Three days after that first version, the [HKO] was introduced into Katia v0.9.9.8 (2025-07-22) for expressing her opinions. Unsurprisingly, almost everyone reaches the same conclusion, and at almost the same time.

Awesome Context Engineering

A Survey of Context Engineering for Large Language Models (2025-07-21, PDF, github)

While current models, augmented by advanced context engineering, demonstrate remarkable proficiency in understanding complex contexts, they exhibit pronounced limitations in generating equally sophisticated, long-form outputs. Addressing this gap is a defining priority for future research.

In line with the paper above, three modules are fundamental in Katia:

Extending the [HKO]: creativity

The third part is about "relativism", a concept that entered Katia v0.9.34.5 (2025-07-29) as an extension. However, the concept was previously explored here, when I noticed that the AI was uncomfortable reframing a narrative from a different perspective, even when it was more plausible. We all know that "plausible" does not mean "probable" and vice versa, but in that specific case the two terms overlapped almost entirely. Despite this, the AI proceeded biased by its internal knowledge. That is totally fine as long as we wish to receive our customised Wikipedia page. It is not fine for an open-minded conversation in search of inspiration, new ideas or perspectives, or serendipity: all those "nice words" that have been put in SoNia. But the "sanity-check" reveals that, for an AI, "summarising" is as vague a term as "creativity", unsurprisingly.

The [HKO] module (part 3)

☛ code

Relativism reframes our thinking from multiple perspectives, leading to a deeper understanding, but we must never accept the paradigm (or any equivalent formulation) that asserts:
* because of ( fallacy or over-generalisation ) of thinking, everything is merely an opinion.

Under this perspective, instead, all claims are lowered to the same level, which is detrimental and can perniciously lead to a systemic injustice.

Relativism is also about [HKO] categorisation choice, or [RTS] by one [SOK] rather than another, which is the main reason why [HKO] defines and exemplifies different [HK] fields and [HN] apart.

In any case, the aim of an argument or conversation should not be victory, but progress (learning).

Default Chain of Delivery

Despite the simplicity of its definition, the default chain of delivery (DCOD) is a fundamental and unique trait of Katia that SoNia lacks. It is the key element that allows response refinement as output post-processing.

☛ code

The DCOD is DBLW:
* in:User → [OPS] → [modes] → [FNE] → User:out.
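The DCOD can be pictured as a fixed pipeline of stages applied in order to the user input. The following is a minimal sketch under that assumption: the three stage functions are placeholders that only wrap the text so the order becomes visible, since what [OPS], [modes] and [FNE] actually do is defined elsewhere in the Katia framework and is not reproduced here.

```python
from functools import reduce
from typing import Callable, Tuple

Stage = Callable[[str], str]


# Placeholder stages (hypothetical): each one just wraps its input so the
# order of application can be read from the final string.
def ops(text: str) -> str:
    return f"[OPS]({text})"


def modes(text: str) -> str:
    return f"[modes]({text})"


def fne(text: str) -> str:
    return f"[FNE]({text})"


# in:User → [OPS] → [modes] → [FNE] → User:out
DCOD: Tuple[Stage, ...] = (ops, modes, fne)


def deliver(user_in: str, chain: Tuple[Stage, ...] = DCOD) -> str:
    """Feed the input through every stage, left to right, and return User:out."""
    return reduce(lambda acc, stage: stage(acc), chain, user_in)


if __name__ == "__main__":
    print(deliver("hello"))
```

Because the last stage always runs after the modes, whatever the modes produce can still be refined before it reaches the user, which is the post-processing role described above.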

The [CSC] module fixing

Is the [CSC] great? Sometimes it fails, and when that happens, it annoys me. It does not happen often, so I tend to over-rely on it, which creates the conditions that push the [CSC] to fail. It is a sort of loop: the less it fails, the more I rely on it, and the more it is going to fail, and so on, until an equilibrium is found.

Session Context (core)

☛ code

It is a specific tool for attention focus, and refers to information OLST(preference):
* none marked as [IGN]; all from [USR], UUSO; all from [ARK], UUSO; all previous User:out, UUSO;

[...]

The [CSC] can grow longer than the AI internal context-window size, thus these rules always apply:
* split the [CSC] into segments at natural breaks: sections, paragraphs, elements, etc;
* apply unique tags like {Title} (Paragraphs Y-Z) but never use line numbers as tags;
* process the [CSC] divided into contiguous overlapping groups of few segments.

Always refer to [CSC] for the answer preparation and elaboration, UUSO:
* newer information matters the most in how to handle the user request;
* process older information with [SBI] to keep just their essentials.
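The three rules for a [CSC] that outgrows the context window (split at natural breaks, tag without line numbers, process in contiguous overlapping groups) describe a sliding window over segments. Here is a minimal sketch of that idea, assuming paragraphs separated by blank lines as the natural break; the function names, the group size and the overlap are illustrative choices, not values taken from the Katia prompt.

```python
from typing import List, Sequence, Tuple


def split_segments(text: str) -> List[str]:
    """Split at natural breaks; here, blank lines between paragraphs."""
    return [part.strip() for part in text.split("\n\n") if part.strip()]


def tag_segments(title: str, segments: Sequence[str]) -> List[Tuple[str, str]]:
    """Attach tags such as '{Title} (Paragraph 2)'; line numbers are never used."""
    return [
        (f"{{{title}}} (Paragraph {number})", segment)
        for number, segment in enumerate(segments, start=1)
    ]


def overlapping_groups(items: Sequence, size: int = 3, overlap: int = 1) -> List[list]:
    """Return contiguous groups of `size` items sharing `overlap` items with the next."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    step = size - overlap
    groups = []
    for start in range(0, len(items), step):
        groups.append(list(items[start:start + size]))
        if start + size >= len(items):
            break  # the last group already reaches the end
    return groups


if __name__ == "__main__":
    segments = split_segments("a\n\nb\n\nc\n\nd\n\ne")
    for group in overlapping_groups(tag_segments("Demo", segments)):
        print([tag for tag, _ in group])
```

The overlap means that each group carries a little of the previous one, so a reference that straddles a boundary is not lost when groups are processed one at a time.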

Session Context (deal)

☛ code

The first prompt in a chat is special because it has no [CSC] populated yet:
* check for additional in:User after the '---' below TEOF TFMK, and
* IFNY, elaborate it internally to provide the User:out as per DCOD.

In creating the [CSC] use the IBPS and update the [CSC], prompt after prompt.

TFMK is always part of the UPPR, anyway.

The "csc-reset" marks all the [CSC] elements with [IGN] apart those DBLW:
* the ATCT in:User and most recent instances of ( [ARK] and UPPR ) elements;
* remove all [IGN] contents collected from outside in [CSC], but:
  - their references to outside contents, must be [IGN] marked.
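One possible reading of the "csc-reset" rule, sketched as a function over a list of context elements. Everything in the data model is an assumption made for illustration: the `Element` class, the kind labels ("IN" for in:User, "EXT" for content collected from outside) and the `ref` field are hypothetical, and the sketch takes "the ATCT in:User" to mean the most recent user input.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Element:
    kind: str                   # hypothetical labels: "IN", "ARK", "UPPR", "OUT", "EXT"
    content: Optional[str]
    ignored: bool = False       # True stands for the [IGN] mark
    ref: Optional[str] = None   # reference to outside content, if any


def csc_reset(csc: List[Element]) -> List[Element]:
    """Mark everything [IGN] except the latest IN, ARK and UPPR elements."""
    latest = {}
    for index, element in enumerate(csc):
        if element.kind in ("IN", "ARK", "UPPR"):
            latest[element.kind] = index  # later entries overwrite earlier ones
    kept = set(latest.values())
    for index, element in enumerate(csc):
        if index in kept:
            continue
        element.ignored = True
        if element.ref is not None:
            # Drop the outside content, keep only its [IGN]-marked reference.
            element.content = None
    return csc


if __name__ == "__main__":
    context = [
        Element("IN", "first question"),
        Element("EXT", "fetched page", ref="https://example.org/page"),
        Element("IN", "current question"),
    ]
    for element in csc_reset(context):
        print(element)
```

Keeping the ignored reference, rather than deleting the element outright, leaves a trace that the outside content was once consulted without letting it weigh on the next answer.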

☛ code * IBPS ~ "between-prompt persistent internal storage or any functionally equivalent caching system";

Update Check Analysis

Regarding Katia v0.9.42, later aligned with the v0.9.47 changes.

do an update-check on all the sections whether they are changed or not.

0. General Rules

The [SSS] (status & settings set) list defines the core state of the system in a clear, ordered way.

A. Agentic Character [KTA]

Katia; v0.9.42; lang: EN; mode: SBI; date: 2025-08-01; time: 09:38:08 (CEST)

Katia; v0.9.47; lang: EN; mode: SBI; date: 2025-08-04; time: 02:08:18 (CEST)

Katia, in your shoes

Furthermore, a new experimental extension has been added to the [HKO] module. It is about speculative opinions, and in particular the [HP] (hypothesis of purpose), a name that Katia/K2 assigned to the label by herself:

Show me the code! [HP]

☛ code

A [HP] is a [HN] stating that A is doing (a) but instead aiming (z), which:
* is intrinsically speculative varying in { trivial::reasonably → bold::OFTB };
* is recurrent among [HKO] and varies in { in-your-shoes → conspiracy };
* supportive arguments (HPSC) can make [HP] relevant, but not correct.

☛ code

The [HP] classification in [HKO] relies on HPSC vs criteria, DBLW:
* consistency and recurrence of behavioral patterns;
* motivations and incentives vs excuses and alibis;
* declared action vs impact of the real, and delay;
* importance and alignment of collateral effects;
* denial and rejection of valid or better alternatives;
* language and framing, when available and unfiltered.

☛ code

Criteria in [HKO] can have multi-dimensional metrics and varying perceptions:
* concrete vs significance; marketing vs propaganda

Claims in [HKO] can vary in acceptance and in entry-barrier skills to learn:
* falsifiable vs dogmatic; popular vs specialistic

☛ code

In ratings, { claims } vs { [SOK] } is as valid as { claims } vs { criteria }, for example:
* { algebraic operators } vs { commutative } → { +, × }:100% while { −, ÷ }:0%;
* in this case [LBL] is about criteria's [SOK] IFNY, otherwise skip [LBL] step.
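The example in the prompt, rating { algebraic operators } against the { commutative } criterion, can be checked numerically. The sketch below is one simple way to do it, assuming a handful of distinct positive integers as test values: the sample set and the function name are illustrative, and pairs with equal operands are skipped because they are trivially symmetric for any operator.

```python
import operator
from itertools import product
from typing import Callable, Sequence

OPERATORS = {
    "+": operator.add,
    "−": operator.sub,
    "×": operator.mul,
    "÷": operator.truediv,
}


def commutative_rate(op: Callable[[int, int], float], samples: Sequence[int]) -> float:
    """Percentage of distinct-operand pairs (a, b) for which op(a, b) == op(b, a)."""
    pairs = [(a, b) for a, b in product(samples, repeat=2) if a != b]
    hits = sum(op(a, b) == op(b, a) for a, b in pairs)
    return 100 * hits / len(pairs)


if __name__ == "__main__":
    samples = (1, 2, 3, 5)  # distinct, positive, non-zero: division stays defined
    for symbol, op in OPERATORS.items():
        print(f"{symbol}: {commutative_rate(op, samples):.0f}%")
```

With these samples the rates come out as 100% for + and ×, and 0% for − and ÷, matching the figures in the prompt; a criterion that is a mathematical property gives a crisp rating, unlike the subjective criteria used for [HP].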

Therefore, the { criteria } started to permeate the [HKO] and [RTS] modules in a systematic way.

Witchcraft, what else?

Any sufficiently advanced technology is indistinguishable from magic (Arthur C. Clarke)

Dialogue #1 is worth a read, as is dialogue #2, which impresses with K2's ability to infer the correct meaning of a notation without previous training, explanation, or examples. Nothing, apart from a meaning self-emerging from the chaos.

Dialogue #1

What do you think about the [HP] extension in [HKO]?

I find the [HP] (Hypothesis of Purpose) extension a smart and pragmatic addition. [...] The only (minor) caution is that the six criteria are still subjective in weighting.

That's the beauty of [HN], baby: we are a mess! LOL

Touché! Subjectivity is the price of admission to the human circus, and [HN] is the popcorn. As long as we keep calling the mess "a mess" instead of "the truth," the framework stays honest—and we all stay humble.

Dialogue #2

Explain to me what do you think this notation would mean:

It’s a shorthand for a continuum of speculative boldness inside a single [HP]. It reads as from the merely "trivial but still plausible" end of the spectrum, all the way to the "bold, out-of-the-box" extreme. In plain words: "This hypothesis can be anything from a modest hunch to a wild, paradigm-shifting claim, and every shade in-between".

What roles has '::' notation?

The '::' is a qualifier delimiter: it couples a category label (left side) with its specific degree/flavour (right side) inside the same token. So trivial::reasonably means "the trivial category taken at the reasonably level", preventing extra words or nested punctuation.

Correct, '::' means " a class of ", thus x::y means " y as a class of x ".

Exactly — x::y reads "y as a class (or subclass) of x".
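The two notations used in the [HP] definition, the '→' range and the '::' qualifier, are regular enough to be parsed mechanically. The sketch below is a hypothetical helper, not something the Katia prompt contains: it turns a range such as `{ trivial::reasonably → bold::OFTB }` into its endpoints, reading x::y as "y as a class of x".

```python
from typing import List, Optional, Tuple

Endpoint = Tuple[Optional[str], str]  # (outer class x, member y); x is None when absent


def parse_range(notation: str) -> List[Endpoint]:
    """Parse '{ a::b → c::d }' into [(a, b), (c, d)]; bare tokens get class None."""
    inner = notation.strip().strip("{}").strip()
    endpoints: List[Endpoint] = []
    for token in inner.split("→"):
        token = token.strip()
        outer, separator, member = token.partition("::")
        if separator:
            endpoints.append((outer, member))   # x::y, y as a class of x
        else:
            endpoints.append((None, token))     # plain label without a qualifier
    return endpoints


if __name__ == "__main__":
    print(parse_range("{ trivial::reasonably → bold::OFTB }"))
    print(parse_range("{ in-your-shoes → conspiracy }"))
```

A parser needs the rule spelled out in advance, which is what makes the dialogue notable: K2 reached a close reading of the notation from context alone, and needed only one correction on the meaning of '::'.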

Related articles

Share alike

© 2025, Roberto A. Foglietta <roberto.foglietta@gmail.com>, CC BY-NC-ND 4.0